Edge Computing and Carbon Reduction

How Proximity-Based Processing Lowers Energy Use

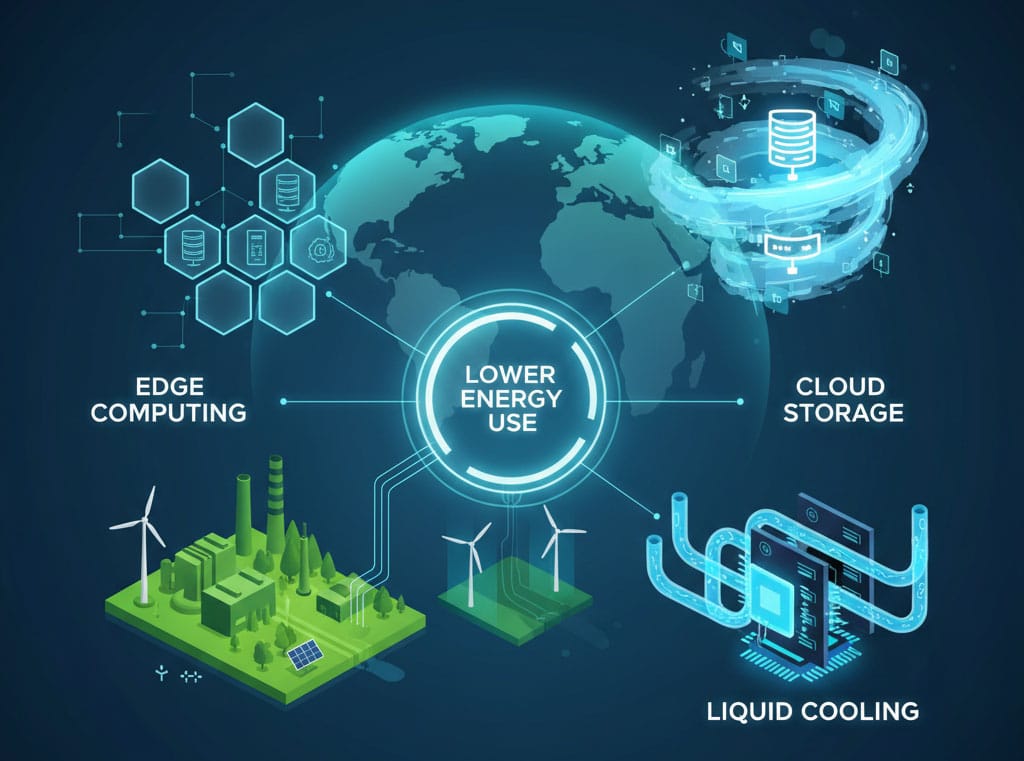

Edge computing can reduce carbon emissions by processing data closer to its source, which cuts energy-intensive long-distance data transfers while improving responsiveness. Combined with hybrid, S3-compatible storage and carbon-aware deployment models, it offers a practical path to more sustainable IT infrastructure.

Data-Driven World

Organisations constantly stream, store, and analyse vast volumes of data. Traditional models rely on centralised cloud data centres, often far from where the data originates. Edge computing, which processes data closer to its source, is an alternative that is gaining momentum.

Beyond reducing latency and bandwidth costs, edge computing can significantly reduce carbon emissions by minimising the energy spent on data travel.

Why Distance Matters for Carbon Emissions

Every time data moves long distances – from edge devices, sensors, or user terminals to distant cloud data centres – transmission over network infrastructure consumes energy. Backbone networks, routers, long-haul links, and peering exchanges all contribute to the energy cost of data movement. By shortening this distance, or eliminating cross-regional hops altogether, edge computing lowers the volume of data that must traverse energy-intensive networks.

As noted by STL Partners, edge computing “reduces energy consumption in networks, by reducing the total amount of data traversing the network.” At scale, that can translate into nontrivial carbon reductions across entire enterprise fleets.
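
The relationship between data volume and transfer emissions can be sketched as a rough back-of-envelope estimate. The snippet below is illustrative only: it assumes a simple linear model (energy proportional to gigabytes moved), which is a simplification of how real networks consume power, and the function names and every number in the usage note are hypothetical placeholders rather than measured values.

```python
def transfer_emissions_g(data_gb: float,
                         network_kwh_per_gb: float,
                         grid_g_co2_per_kwh: float) -> float:
    """Estimate grams of CO2 for moving data_gb across a network.

    Assumes energy scales linearly with volume (a simplification).
    """
    return data_gb * network_kwh_per_gb * grid_g_co2_per_kwh


def edge_saving_g(raw_gb: float,
                  summary_fraction: float,
                  network_kwh_per_gb: float,
                  grid_g_co2_per_kwh: float) -> float:
    """Estimate grams of CO2 avoided when only a fraction of raw data
    (for example, aggregated summaries) is forwarded to the cloud."""
    if not 0.0 <= summary_fraction <= 1.0:
        raise ValueError("summary_fraction must be between 0 and 1")
    baseline = transfer_emissions_g(raw_gb, network_kwh_per_gb, grid_g_co2_per_kwh)
    with_edge = transfer_emissions_g(raw_gb * summary_fraction,
                                     network_kwh_per_gb, grid_g_co2_per_kwh)
    return baseline - with_edge
```

For instance, with placeholder inputs of 100 GB of raw data, 10% forwarded after local processing, 0.01 kWh per GB, and 400 g CO2 per kWh, `edge_saving_g(100, 0.1, 0.01, 400)` comes to roughly 360 g. Real deployments would need measured network and grid figures, and would also have to count the energy used by the edge hardware itself.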

How Edge Computes More Efficiently

- Localised Processing – With edge, raw data is processed near its origin: on gateways, small servers, micro data centres, or embedded “edge nodes.” Only distilled results or aggregated summaries need to be sent to the central cloud, which reduces redundant data transfer and the energy cost of repeated transmissions.

- S3-Compatible Storage Placement – Many edge deployments now leverage S3-compatible solutions with regional or edge buckets, ensuring data stays close to users and applications. This not only enhances access speeds but also reduces long-haul data traffic and its associated carbon cost.

- Model Optimisation – Edge AI systems often run inference on lighter, optimised models. According to one source, edge AI implementations can use 65% to 80% less energy than fully cloud-based AI, largely by cutting data transmission overhead.

- Carbon-Aware Workload Placement – Frameworks like CarbonEdge exploit spatial variations in carbon intensity to shift workloads among edge nodes in ways that minimise emissions while maintaining latency, with reported reductions of up to 78.7% in some scenarios.

- Hybrid Edge–Cloud Cooperation – Not all tasks fit at the edge; some require heavy compute or storage capacity. Hybrid models allow intelligent partitioning: delay-insensitive workloads are sent to the cloud, while latency-sensitive tasks stay local. This balances carbon cost against performance.
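
The carbon-aware placement and hybrid partitioning ideas above can be illustrated with a small greedy sketch. This is not CarbonEdge's algorithm; it is a minimal example that assumes each candidate node exposes a latency and a current grid carbon intensity. The `Node` type, the `place_workload` function, and all node names and values are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Node:
    name: str
    latency_ms: float        # round-trip latency from the data source
    carbon_g_per_kwh: float  # current grid carbon intensity at the node
    is_cloud: bool = False


def place_workload(nodes: Sequence[Node],
                   max_latency_ms: Optional[float]) -> Node:
    """Pick the lowest-carbon node that meets the latency bound.

    max_latency_ms=None marks a delay-insensitive workload, so every
    node (including a distant cloud region) is a candidate.
    """
    candidates = [
        n for n in nodes
        if max_latency_ms is None or n.latency_ms <= max_latency_ms
    ]
    if not candidates:
        raise ValueError("no node satisfies the latency bound")
    return min(candidates, key=lambda n: n.carbon_g_per_kwh)


# Hypothetical fleet: two edge sites and one cloud region.
fleet = [
    Node("edge-a", latency_ms=5, carbon_g_per_kwh=450),
    Node("edge-b", latency_ms=12, carbon_g_per_kwh=120),
    Node("cloud-1", latency_ms=80, carbon_g_per_kwh=60, is_cloud=True),
]

latency_sensitive = place_workload(fleet, max_latency_ms=20)
delay_insensitive = place_workload(fleet, max_latency_ms=None)
```

Here the latency-sensitive job lands on `edge-b`, the cleanest node within 20 ms, while the delay-insensitive job goes to `cloud-1`. A production scheduler would also need to account for node capacity, data transfer cost, and carbon intensity changing over time.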

Quantitative Estimates & Industry Evidence

Studies in manufacturing environments suggest energy savings of up to 40% when using edge computing compared with relying purely on cloud models. Other modelling work indicates that decentralised architectures (edge + cloud + S3-compatible storage) may yield 19–28% savings over centralised clouds in some settings.

Moreover, as cloud data centres scale, total global data centre energy use is projected to rise sharply. The growth of edge computing offers a path to moderate that trend by distributing load and reducing redundant transfers.

Challenges & Design Considerations

The efficiency benefits of edge are not guaranteed; they depend heavily on system architecture, hardware efficiency, and workload partitioning. Some challenges include:

- Power reliability at edge locations – Nodes in remote locations may rely on less stable power.

- Hardware optimisation & scheduling – Devices must be optimised for low power operation with smart load balancing.

- Rebound or demand expansion – More responsive computing can increase demand, offsetting gains.

- Lifecycle emissions – Manufacturing and disposal of distributed edge devices add carbon cost.

- Coordination complexity – Hybrid, carbon-aware orchestration across many nodes remains technically demanding.
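
The lifecycle point can be made concrete with a simple break-even calculation: an edge device only yields a net benefit once its operational savings exceed the carbon embodied in manufacturing and disposing of it. The sketch below assumes a constant monthly saving; the function names and the figures in the usage note are hypothetical.

```python
import math


def breakeven_months(embodied_kg: float, monthly_saving_kg: float) -> float:
    """Months of operation needed before operational savings repay
    the device's embodied (manufacturing and disposal) emissions."""
    if monthly_saving_kg <= 0:
        return math.inf  # the device never pays back its embodied carbon
    return embodied_kg / monthly_saving_kg


def net_saving_kg(embodied_kg: float,
                  monthly_saving_kg: float,
                  lifetime_months: float) -> float:
    """Net kg CO2 avoided over the device lifetime (negative = net cost)."""
    return monthly_saving_kg * lifetime_months - embodied_kg
```

As an illustration, a device with 300 kg of embodied CO2 that avoids 10 kg per month breaks even after 30 months and nets 300 kg over a 60-month life. If rebound effects shrink the monthly saving, the break-even point moves out accordingly.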

The Path Forward

Edge computing is more than a performance optimisation; it is an emerging lever in sustainable IT. By combining edge nodes with S3-compatible regional storage, organisations can keep data near its source, minimise carbon-intensive transfers, and still leverage cloud scalability. As frameworks like CarbonEdge and GreenScale mature, carbon-aware deployment will enable enterprises to reduce energy footprints without compromising responsiveness.

In a world where digital infrastructure is increasingly measured by environmental impact, deploying compute and storage closer to their point of use is a strategy with both technical and climate upside.